A perspective camera is an idealized camera model that we use to reason about image formation in computer vision. Here we use the frontal projection model, which differs slightly from the pinhole picture you may have learned a while ago: the projection plane is on the same side of the pinhole as the object, so the image is not inverted.

TLDR

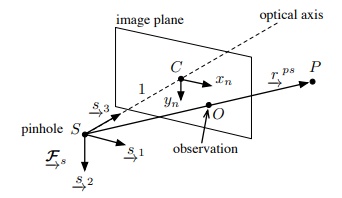

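Given we have points $\mathbf{p}_w = [X_w, Y_w, Z_w]^T$ in the world frame, and the transform between the camera and the world frame (taking world coordinates into the camera frame) is given by

$$
T = \begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^T & 1 \end{bmatrix}
$$

The pinhole camera model is defined as

$$
\lambda \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K \begin{bmatrix} R & \mathbf{t} \end{bmatrix} \begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix}
$$

Which expanded looks like

$$
\lambda \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} =
\begin{bmatrix} f_x & s & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} r_{11} & r_{12} & r_{13} & t_x \\ r_{21} & r_{22} & r_{23} & t_y \\ r_{31} & r_{32} & r_{33} & t_z \end{bmatrix}
\begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix}
$$

- $K$ is our camera intrinsic matrix

- $f_x$ is our horizontal focal length accounting for pixel size

- $f_y$ is our vertical focal length accounting for pixel size

- $s$ is our skew coefficient (usually 0)

- $c_x$ is the horizontal offset to center the image plane with the camera frame

- $c_y$ is the vertical offset to center the image plane with the camera frame

- $[R \mid \mathbf{t}]$ is our camera extrinsic matrix (the transformation matrix $T$ with its bottom row chopped off)

- $R$ is a valid rotation matrix

- $\mathbf{t}$ is our translation vector

- $\lambda$ is the depth of the point in the camera frame (it must be divided out at the end to get our $u$ and $v$). The act of dividing out the depth is known as perspective projection.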
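The projection described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `project_points` and all numeric values are assumptions chosen for the example, with `K` the intrinsic matrix and `R`, `t` the rotation and translation of the extrinsics.

```python
import numpy as np

def project_points(points_w, K, R, t):
    """Project Nx3 world points to Nx2 pixel coordinates (no distortion)."""
    points_w = np.asarray(points_w, dtype=float)
    # Extrinsics: move the points from the world frame into the camera frame.
    points_c = points_w @ R.T + t
    # Intrinsics: homogeneous pixel coordinates, still scaled by the depth.
    uvw = points_c @ K.T
    # Perspective projection: divide out the depth (third component).
    return uvw[:, :2] / uvw[:, 2:3]

# Illustrative values only (not from a real calibration).
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)        # camera axes aligned with the world axes
t = np.zeros(3)      # camera located at the world origin

points_w = np.array([[0.0, 0.0, 2.0],      # a point on the optical axis
                     [0.5, -0.25, 2.0]])   # a point off to one side
pixels = project_points(points_w, K, R, t)
print(pixels)
```

With these values the point on the optical axis lands on the principal point `(c_x, c_y)`, which is a quick sanity check for any projection code.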

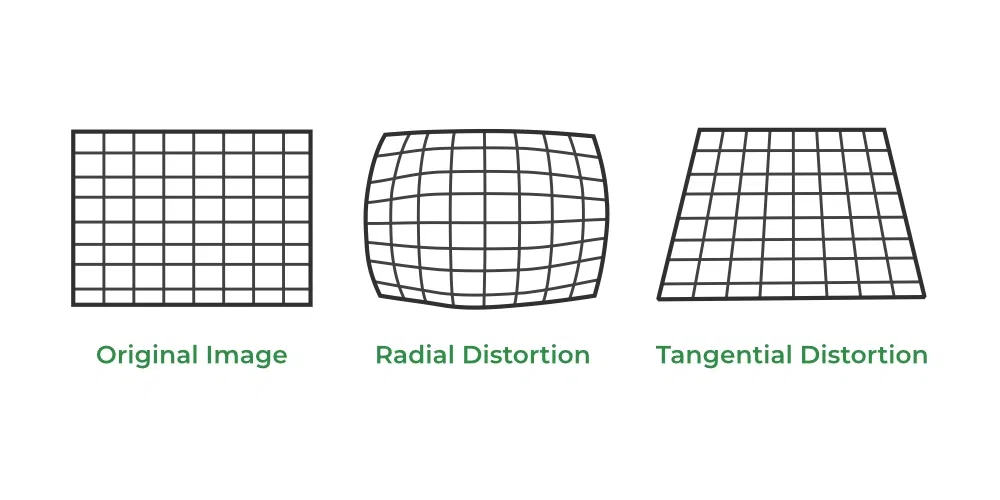

Accounting for Distortion

Distortion is a non-linear function, and there are a number of different models for it.

Brown-Conrady Distortion Model

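AKA Plumb Bob Model

$$
\begin{aligned}
x_d &= x\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + 2 p_1 x y + p_2 (r^2 + 2 x^2) \\
y_d &= y\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + p_1 (r^2 + 2 y^2) + 2 p_2 x y
\end{aligned}
$$

where $(x, y)$ is the undistorted normalized image coordinate, $(x_d, y_d)$ is the distorted one, $r^2 = x^2 + y^2$, $k_1, k_2, k_3$ are the radial distortion coefficients, and $p_1, p_2$ are the tangential distortion coefficients.

This is the distortion model used by OpenCV.

OpenCV's full model supports additional coefficients, but most calibrations use the standard, simplified 5-parameter form written above, with coefficients ordered $(k_1, k_2, p_1, p_2, k_3)$.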

Adding Distortion into the model

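We add in the distortion after the extrinsic and perspective transform, before passing through the camera intrinsics. Writing $D(\cdot)$ for the distortion function and $[X_c, Y_c, Z_c]^T = R\,\mathbf{p}_w + \mathbf{t}$ for the point in the camera frame:

$$
\begin{bmatrix} x \\ y \end{bmatrix} = \frac{1}{Z_c} \begin{bmatrix} X_c \\ Y_c \end{bmatrix},
\qquad
\begin{bmatrix} x_d \\ y_d \end{bmatrix} = D\!\left(\begin{bmatrix} x \\ y \end{bmatrix}\right),
\qquad
\begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K \begin{bmatrix} x_d \\ y_d \\ 1 \end{bmatrix}
$$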
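The ordering of the steps can be sketched as follows. This is a hedged example that assumes the 5-parameter radial plus tangential form with coefficients ordered `(k1, k2, p1, p2, k3)`; the function names `distort` and `project_with_distortion` and the numeric values are made up for illustration.

```python
import numpy as np

def distort(xy, k1, k2, p1, p2, k3=0.0):
    """Apply radial + tangential distortion to Nx2 normalized coordinates."""
    x, y = xy[:, 0], xy[:, 1]
    r2 = x**2 + y**2
    radial = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x**2)
    y_d = y * radial + p1 * (r2 + 2.0 * y**2) + 2.0 * p2 * x * y
    return np.stack([x_d, y_d], axis=1)

def project_with_distortion(points_w, K, R, t, dist):
    """World points (Nx3) -> pixel coordinates (Nx2), including distortion."""
    # 1. Extrinsic transform into the camera frame.
    points_c = np.asarray(points_w, dtype=float) @ R.T + t
    # 2. Perspective projection to normalized image coordinates.
    xy = points_c[:, :2] / points_c[:, 2:3]
    # 3. Distortion, applied to the normalized coordinates.
    xy_d = distort(xy, *dist)
    # 4. Intrinsics, mapping to pixel coordinates.
    ones = np.ones((len(xy_d), 1))
    uv1 = np.hstack([xy_d, ones]) @ K.T
    return uv1[:, :2]

# Illustrative values only (not from a real calibration).
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.zeros(3)
dist = (-0.2, 0.0, 0.0, 0.0, 0.0)   # (k1, k2, p1, p2, k3): mild barrel distortion

points_w = np.array([[0.0, 0.0, 2.0], [0.5, -0.25, 2.0]])
pixels = project_with_distortion(points_w, K, R, t, dist)
print(pixels)
```

A point on the optical axis has $r = 0$ and is unaffected, while off-axis points are pulled toward the image center when `k1` is negative.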

Explanation

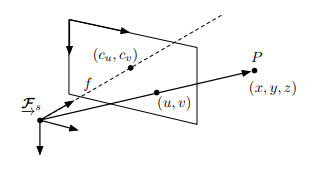

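We have our pinhole and a point in 3D space, $P$. The vector to the point from the pinhole is

$$
\mathbf{p} = \begin{bmatrix} X \\ Y \\ Z \end{bmatrix}
$$

It is important to note that the $Z$ axis is normal to the image plane.

The projected point, which ends up on the image plane, is given by the vector

$$
\bar{\mathbf{x}} = \frac{1}{Z}\,\mathbf{p} = \begin{bmatrix} X/Z \\ Y/Z \\ 1 \end{bmatrix}
$$

This places the image plane at $Z = 1$, that is, a focal length of 1. The vector is kept homogeneous so that it works with matrix calculations. This is called a normalized image coordinate.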

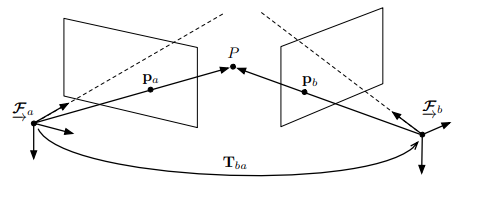

Essential Matrix

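If the same point is observed by the same camera before and after a transformation $(R, \mathbf{t})$, the two normalized observations $\bar{\mathbf{x}}_1$ and $\bar{\mathbf{x}}_2$ of that point are related by

$$
\bar{\mathbf{x}}_2^T \, E \, \bar{\mathbf{x}}_1 = 0
$$

where $E$ is called the essential matrix.

It is related to the pose of the camera by

$$
E = [\mathbf{t}]_\times R
$$

where $[\mathbf{t}]_\times$ is the skew-symmetric cross-product matrix of $\mathbf{t}$, using the convention $\mathbf{p}_2 = R\,\mathbf{p}_1 + \mathbf{t}$ for the point's coordinates in the two camera frames.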
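As a numerical check of the relation between the essential matrix and the camera pose, here is a small sketch. It assumes the convention `p2 = R @ p1 + t` for the point's coordinates in the two camera frames and builds `E = skew(t) @ R`; the helper names and the pose values are illustrative assumptions.

```python
import numpy as np

def skew(v):
    """Skew-symmetric matrix such that skew(v) @ w == np.cross(v, w)."""
    x, y, z = v
    return np.array([[0.0, -z, y],
                     [z, 0.0, -x],
                     [-y, x, 0.0]])

def essential_from_pose(R, t):
    """Essential matrix for the convention p2 = R @ p1 + t."""
    return skew(t) @ R

# Illustrative pose: 10 degree rotation about the y axis plus a translation.
theta = np.deg2rad(10.0)
R = np.array([[np.cos(theta), 0.0, np.sin(theta)],
              [0.0, 1.0, 0.0],
              [-np.sin(theta), 0.0, np.cos(theta)]])
t = np.array([0.5, 0.0, 0.1])

p1 = np.array([0.3, -0.2, 4.0])   # the point in the first camera frame
p2 = R @ p1 + t                   # the same point in the second camera frame

x1 = p1 / p1[2]                   # normalized image coordinates
x2 = p2 / p2[2]

E = essential_from_pose(R, t)
residual = x2 @ E @ x1            # should be zero up to rounding error
print(residual)
```

The residual vanishes because `x2 @ E @ x1` is proportional to `p2 . (t x R p1)`, and `p2` lies in the plane spanned by `t` and `R p1`.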

Lens Distortion

Lens distortion exists, and we need to characterize it and deal with it, because it affects how closely a real camera matches our idealized model. Once it is characterized, we can run an undistortion procedure to turn the camera image into something we can actually use.
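One common way to undo a characterized distortion is a fixed-point iteration on the normalized coordinates. The sketch below is an assumption about how such a procedure could look, not a description of any particular library's implementation; it assumes the 5-parameter radial plus tangential model with coefficients `(k1, k2, p1, p2, k3)`, and the coefficient values are made up.

```python
import numpy as np

def distortion_terms(xy, dist):
    """Radial scale factor (Nx1) and tangential offset (Nx2) at points xy."""
    k1, k2, p1, p2, k3 = dist
    x, y = xy[:, 0], xy[:, 1]
    r2 = x**2 + y**2
    radial = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    tangential = np.stack([2.0 * p1 * x * y + p2 * (r2 + 2.0 * x**2),
                           p1 * (r2 + 2.0 * y**2) + 2.0 * p2 * x * y], axis=1)
    return radial[:, None], tangential

def distort(xy, dist):
    """Forward model: ideal normalized coordinates -> distorted ones."""
    radial, tangential = distortion_terms(xy, dist)
    return xy * radial + tangential

def undistort(xy_d, dist, iterations=20):
    """Invert the forward model by fixed-point iteration.

    Starts from the distorted point and repeatedly solves
    xy = (xy_d - tangential(xy)) / radial(xy) using the current estimate.
    """
    xy = xy_d.copy()
    for _ in range(iterations):
        radial, tangential = distortion_terms(xy, dist)
        xy = (xy_d - tangential) / radial
    return xy

# Illustrative coefficients (mild distortion, not from a real lens).
dist = (-0.2, 0.05, 0.001, -0.001, 0.0)
xy_true = np.array([[0.0, 0.0], [0.25, -0.125], [-0.3, 0.2]])

xy_d = distort(xy_true, dist)
xy_rec = undistort(xy_d, dist)
max_error = np.abs(xy_rec - xy_true).max()
print(max_error)
```

The iteration converges quickly when the distortion is mild; strong distortion far from the image center may need more iterations or a different solver. Undistorting a whole image additionally requires resampling the pixels, which this sketch does not cover.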

Intrinsic Parameters

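Up to this point we assumed that the focal length is 1, so we now need to account for the real focal length, as well as map to pixel coordinates, which start from the top left of the image. Both are handled by the intrinsic matrix $K$ applied to the normalized image coordinate $[x, y, 1]^T$:

$$
\begin{bmatrix} u \\ v \\ 1 \end{bmatrix} =
\begin{bmatrix} f_x & s & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
$$

The entries of $K$ are the intrinsic parameters, and they have to be determined through camera calibration.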

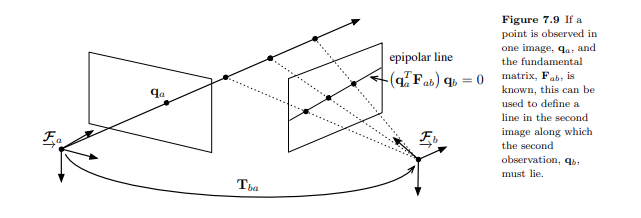

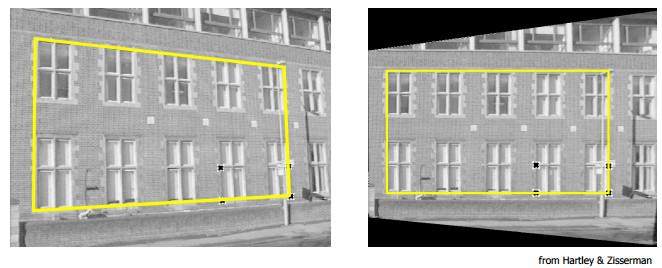

Fundamental Matrix

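Similar to the essential matrix, but defined between two different cameras and expressed in pixel coordinates. Let's say we have two different cameras with intrinsic matrices $K_1$ and $K_2$, which observe the same point at the homogeneous pixel coordinates

$$
\mathbf{u}_1 = K_1 \bar{\mathbf{x}}_1, \qquad \mathbf{u}_2 = K_2 \bar{\mathbf{x}}_2
$$

where $\bar{\mathbf{x}}_1$ and $\bar{\mathbf{x}}_2$ are the normalized image coordinates of the point in each camera.

The fundamental matrix, $F$, exists such that

$$
\mathbf{u}_2^T \, F \, \mathbf{u}_1 = 0
$$

Reasoning

Substituting $\bar{\mathbf{x}}_i = K_i^{-1} \mathbf{u}_i$ into the essential matrix constraint $\bar{\mathbf{x}}_2^T E \, \bar{\mathbf{x}}_1 = 0$ gives

$$
\mathbf{u}_2^T \, K_2^{-T} E \, K_1^{-1} \, \mathbf{u}_1 = 0
\quad\Longrightarrow\quad
F = K_2^{-T} E \, K_1^{-1}
$$

The constraint associated with the fundamental matrix is also called the epipolar constraint.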
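The epipolar constraint can be checked numerically in pixel coordinates. This sketch assumes the relation `F = inv(K2).T @ E @ inv(K1)` with `E = skew(t) @ R` and the convention `p2 = R @ p1 + t`; the function names and all numeric values are illustrative assumptions.

```python
import numpy as np

def skew(v):
    """Skew-symmetric matrix such that skew(v) @ w == np.cross(v, w)."""
    x, y, z = v
    return np.array([[0.0, -z, y],
                     [z, 0.0, -x],
                     [-y, x, 0.0]])

def fundamental_from_essential(E, K1, K2):
    """Fundamental matrix from the essential matrix and both intrinsics."""
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)

# Two cameras with different (made-up) intrinsics.
K1 = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
K2 = np.array([[600.0, 0.0, 300.0], [0.0, 610.0, 250.0], [0.0, 0.0, 1.0]])

# Relative pose of camera 2 with respect to camera 1.
theta = np.deg2rad(10.0)
R = np.array([[np.cos(theta), 0.0, np.sin(theta)],
              [0.0, 1.0, 0.0],
              [-np.sin(theta), 0.0, np.cos(theta)]])
t = np.array([0.5, 0.0, 0.1])

E = skew(t) @ R
F = fundamental_from_essential(E, K1, K2)

p1 = np.array([0.3, -0.2, 4.0])   # the point in the camera 1 frame
p2 = R @ p1 + t                   # the same point in the camera 2 frame
u1 = K1 @ (p1 / p1[2])            # homogeneous pixel coordinates in image 1
u2 = K2 @ (p2 / p2[2])            # homogeneous pixel coordinates in image 2

residual = u2 @ F @ u1            # epipolar constraint, zero up to rounding
line2 = F @ u1                    # epipolar line in image 2 that contains u2
print(residual)
```

Here `line2` holds the coefficients `(a, b, c)` of the line `a*u + b*v + c = 0` in the second image, which is why the search for a matching point can be restricted to a line.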

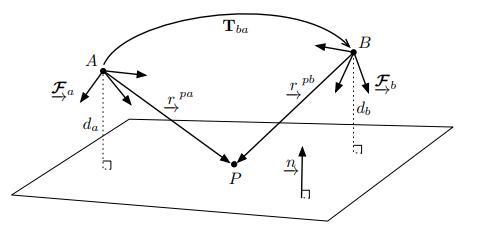

Homography

If an observed point lies on a plane of known geometry, it is possible to work out where the point will appear in another camera after a known pose change. This mapping is called a homography.

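Like before, we have two cameras, and the coordinates of the point $P$ in the two camera frames are related by

$$
\mathbf{p}_2 = R\,\mathbf{p}_1 + \mathbf{t}
$$

Say we know the equation of the plane, expressed in both camera frames, to be

$$
\mathbf{n}_1^T \mathbf{p}_1 = d_1, \qquad \mathbf{n}_2^T \mathbf{p}_2 = d_2
$$

where $\mathbf{n}_i$ is the unit normal of the plane and $d_i$ is its distance from the camera center, both in frame $i$.

This implies that

$$
\frac{\mathbf{n}_1^T \mathbf{p}_1}{d_1} = 1
$$

Substituting this into our equation for $\mathbf{p}_2$

$$
\mathbf{p}_2 = R\,\mathbf{p}_1 + \mathbf{t}\,\frac{\mathbf{n}_1^T \mathbf{p}_1}{d_1}
= \left(R + \frac{1}{d_1}\,\mathbf{t}\,\mathbf{n}_1^T\right)\mathbf{p}_1
$$

This implies that we can write the coordinates of $P$ with respect to one camera frame in terms of the other as

$$
\mathbf{p}_2 = H\,\mathbf{p}_1, \qquad H = R + \frac{1}{d_1}\,\mathbf{t}\,\mathbf{n}_1^T
$$

Extending this to our coordinates in the image plane, with $\mathbf{p}_i = \lambda_i \bar{\mathbf{x}}_i$, we get that

$$
\lambda_2\,\bar{\mathbf{x}}_2 = \lambda_1\,H\,\bar{\mathbf{x}}_1
\quad\Longrightarrow\quad
\bar{\mathbf{x}}_2 \sim H\,\bar{\mathbf{x}}_1
$$

where $\sim$ denotes equality up to scale.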

The homography matrix lets us determine how a point in one image plane will look in another image plane, given that we know the geometry of the plane the point lies on.

The homography matrix is invertible, so the mapping also works in the other direction.
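A small numerical sketch of the plane-induced homography follows. It assumes the plane is written as `n1 @ p1 == d1` in the first camera frame and the pose convention `p2 = R @ p1 + t`, which gives `H = R + outer(t, n1) / d1`; with the opposite sign convention for the plane the sign of the second term flips. All values are illustrative.

```python
import numpy as np

def homography_from_plane(R, t, n1, d1):
    """Homography induced by the plane n1 . p1 = d1 (camera 1 frame)."""
    return R + np.outer(t, n1) / d1

def apply_homography(H, x):
    """Map a homogeneous image point and rescale its last entry to 1."""
    y = H @ x
    return y / y[2]

# Illustrative pose of camera 2 relative to camera 1.
theta = np.deg2rad(10.0)
R = np.array([[np.cos(theta), 0.0, np.sin(theta)],
              [0.0, 1.0, 0.0],
              [-np.sin(theta), 0.0, np.cos(theta)]])
t = np.array([0.5, 0.0, 0.1])

# Plane Z = 5 in the camera 1 frame, and a point lying on it.
n1 = np.array([0.0, 0.0, 1.0])
d1 = 5.0
p1 = np.array([0.4, -0.3, 5.0])

H = homography_from_plane(R, t, n1, d1)

x1 = p1 / p1[2]                       # observation in camera 1
p2 = R @ p1 + t
x2 = p2 / p2[2]                       # true observation in camera 2

x2_pred = apply_homography(H, x1)                  # prediction via H
x1_back = apply_homography(np.linalg.inv(H), x2)   # inverse mapping
print(x2_pred, x2)
```

To work directly in pixel coordinates under the same assumptions, the corresponding matrix would be `K2 @ H @ inv(K1)`, where `K1` and `K2` are the two intrinsic matrices.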