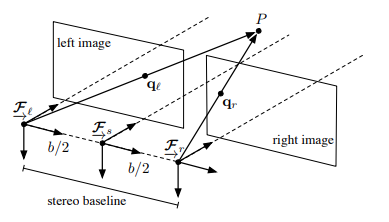

We express all quantities for a stereo camera with respect to a coordinate frame located at the midpoint between the two cameras (known as the midpoint model).

The models for the left and right cameras are as follows.

Assuming that the two cameras have the same intrinsic parameters
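As a sketch of what the per-camera models look like under shared intrinsics, assume (these conventions are not stated in the notes) that the x-axis of the midpoint frame points from the left camera to the right camera, that the baseline is b, and that both cameras share focal lengths f_u, f_v and principal point (c_u, c_v). A point (x, y, z) in the midpoint frame then projects to:

$$
\begin{aligned}
u_\ell &= f_u \,\frac{x + \tfrac{b}{2}}{z} + c_u, &\qquad v_\ell &= f_v \,\frac{y}{z} + c_v, \\
u_r &= f_u \,\frac{x - \tfrac{b}{2}}{z} + c_u, &\qquad v_r &= f_v \,\frac{y}{z} + c_v.
\end{aligned}
$$

The only difference between the two cameras is the sign of the b/2 shift along x; the vertical coordinates are identical.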

Stacking the two, we get
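Under assumed conventions (x-axis pointing from the left camera to the right camera, baseline b, shared intrinsics f_u, f_v, c_u, c_v, and a point (x, y, z) expressed in the midpoint frame), the stacked measurement could be written as:

$$
\begin{bmatrix} u_\ell \\ v_\ell \\ u_r \\ v_r \end{bmatrix}
= \frac{1}{z}
\begin{bmatrix}
f_u & 0 & c_u & \tfrac{1}{2} f_u b \\
0 & f_v & c_v & 0 \\
f_u & 0 & c_u & -\tfrac{1}{2} f_u b \\
0 & f_v & c_v & 0
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}
$$

The baseline appears only in the last column, with opposite signs for the two cameras.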

You can also model the stereo camera with respect to the left or right camera frame.

Left Model

The camera model becomes
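As a sketch, assuming the point (x, y, z) is now expressed in the left camera frame, the right camera sits a full baseline b along the positive x-axis, and both cameras share the intrinsics f_u, f_v, c_u, c_v (conventions assumed here, not stated in the notes), the stacked model would read:

$$
\begin{bmatrix} u_\ell \\ v_\ell \\ u_r \\ v_r \end{bmatrix}
= \frac{1}{z}
\begin{bmatrix}
f_u & 0 & c_u & 0 \\
0 & f_v & c_v & 0 \\
f_u & 0 & c_u & -f_u b \\
0 & f_v & c_v & 0
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}
$$

The left camera rows reduce to an ordinary pinhole projection, and the whole baseline shift moves into the right camera's horizontal coordinate.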

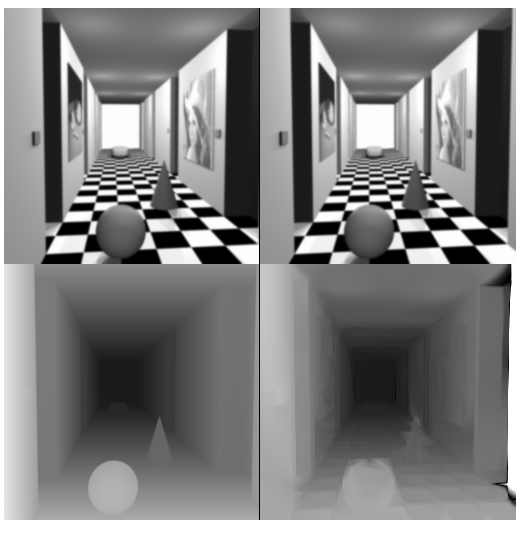

The key property of a stereo camera is that we know the distance between the two cameras (the baseline b) and the intrinsics of both. Because the cameras lie in the same plane (offset only by b), we can formulate a relationship between a point's depth z and its disparity.
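One way to see the relationship, assuming shared intrinsics and defining the disparity d as the difference between the left and right horizontal pixel coordinates (a common convention, assumed here), is to subtract the two horizontal projections. The x and c_u terms cancel:

$$
d = u_\ell - u_r = \frac{f_u\, b}{z}
\qquad\Longleftrightarrow\qquad
z = \frac{f_u\, b}{d}
$$

Disparity is inversely proportional to depth: nearby points produce a large disparity, and distant points a disparity close to zero.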

Keep in mind, however, that we do not know z directly; we usually have to estimate it using correspondence between the two images.

This sensor model is just telling us how disparity relates to the position of the point.

(left, disparity if we know the geometry we are looking at)

(right, disparity that we guess from some form of correspondence algorithm)
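The two views of disparity can be illustrated with a small sketch. The function names, the intrinsic values, and the axis convention (x pointing from the left camera to the right camera, point expressed in the midpoint frame) are assumptions made for illustration. The code first computes the disparity from known geometry, then treats that disparity as if a correspondence algorithm had measured it and recovers the depth.

```python
def project_midpoint(point, fu, fv, cu, cv, b):
    """Project a 3D point given in the (assumed) midpoint frame into both images.

    Returns (u_l, v_l, u_r, v_r) in pixels. Both cameras are assumed to
    share the intrinsics fu, fv, cu, cv and to be separated by baseline b.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the cameras (z > 0)")
    u_l = fu * (x + b / 2.0) / z + cu  # left camera sits at -b/2 along x
    u_r = fu * (x - b / 2.0) / z + cu  # right camera sits at +b/2 along x
    v = fv * y / z + cv                # same row in both images
    return u_l, v, u_r, v


def depth_from_disparity(d, fu, b):
    """Invert d = fu * b / z to recover depth from a measured disparity."""
    if d <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return fu * b / d


# Hypothetical intrinsics and baseline, chosen only for illustration.
fu, fv, cu, cv, b = 500.0, 500.0, 320.0, 240.0, 0.1
point = (0.2, -0.1, 2.0)

u_l, v_l, u_r, v_r = project_midpoint(point, fu, fv, cu, cv, b)
disparity = u_l - u_r                      # disparity from known geometry
z_est = depth_from_disparity(disparity, fu, b)  # depth from disparity
print(disparity, z_est)
```

In practice the disparity would come from matching pixels between the two images rather than from a known point, so the recovered depth is only as good as the correspondence.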
