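The Bayes filter seeks an entire PDF to represent the likelihood of the current state $x_k$, using only the measurements up to and including the current time. With $\check{x}_0$ denoting the initial state knowledge, $v_{1:k}$ the inputs, and $y_{0:k}$ the measurements, this is written as

$$p(x_k \mid \check{x}_0, v_{1:k}, y_{0:k})$$

Because the NLNG Problem Statement is Markovian, the measurements are conditionally independent given the state, so we can apply Bayes' rule and factor out the current measurement, with $\eta$ a normalization constant:

$$p(x_k \mid \check{x}_0, v_{1:k}, y_{0:k}) = \eta \, p(y_k \mid x_k) \, p(x_k \mid \check{x}_0, v_{1:k}, y_{0:k-1})$$

If we introduce the previous state $x_{k-1}$ into the second factor and integrate over all of its possible values, we get

$$p(x_k \mid \check{x}_0, v_{1:k}, y_{0:k-1}) = \int p(x_k \mid x_{k-1}, \check{x}_0, v_{1:k}, y_{0:k-1}) \, p(x_{k-1} \mid \check{x}_0, v_{1:k}, y_{0:k-1}) \, dx_{k-1}$$

Taking advantage of the Markovian property again, both factors in the integrand simplify:

$$p(x_k \mid x_{k-1}, \check{x}_0, v_{1:k}, y_{0:k-1}) = p(x_k \mid x_{k-1}, v_k), \qquad p(x_{k-1} \mid \check{x}_0, v_{1:k}, y_{0:k-1}) = p(x_{k-1} \mid \check{x}_0, v_{1:k-1}, y_{0:k-1})$$

This leads us to the Bayes filter:

$$p(x_k \mid \check{x}_0, v_{1:k}, y_{0:k}) = \eta \, p(y_k \mid x_k) \int p(x_k \mid x_{k-1}, v_k) \, p(x_{k-1} \mid \check{x}_0, v_{1:k-1}, y_{0:k-1}) \, dx_{k-1}$$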

The Bayes filter is nothing more than a mathematical artifact, but it provides the fundamental high-level reasoning for why recursive state estimation filters work.

The Bayes filter tells us that optimal state estimation is a two-step recursive process:

- The prediction step uses the motion model to propagate the prior belief forward.

- The correction step uses observations to refine our belief. The resulting final belief is the normalized product of the two.
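The two steps above can be sketched on a discretized state space, where the belief is a vector of cell probabilities and the integral over the previous state becomes a matrix-vector product. This is only a minimal illustration: the 5-cell circular world, the `transition` matrix, and the `likelihood` values are all made up for the example.

```python
import numpy as np

def predict(belief, transition):
    """Prediction step: propagate the prior belief through the motion model.

    transition[i, j] is the (assumed) probability of moving from cell j to
    cell i, so summing over the previous state is a matrix-vector product.
    """
    return transition @ belief

def correct(predicted, likelihood):
    """Correction step: multiply by the measurement likelihood, then normalize."""
    posterior = likelihood * predicted
    return posterior / posterior.sum()

# Hypothetical 5-cell circular world; the robot tries to move one cell right.
n = 5
transition = np.zeros((n, n))
for j in range(n):
    transition[(j + 1) % n, j] = 0.8  # the move succeeds
    transition[j, j] = 0.2            # the robot stays put

belief = np.array([1.0, 0.0, 0.0, 0.0, 0.0])      # prior: robot is in cell 0
likelihood = np.array([0.1, 0.7, 0.1, 0.1, 0.1])  # p(y_k | x_k): sensor favors cell 1

belief = correct(predict(belief, transition), likelihood)
print(belief)
```

After one cycle, most of the probability mass sits in cell 1, where the motion model and the measurement agree.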

Key things to note

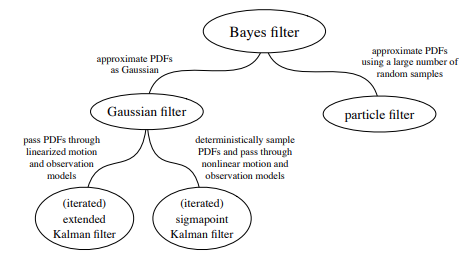

- PDFs live in an infinite-dimensional space, so an infinite amount of memory would be needed to represent our belief exactly. To deal with this issue, we can

  - approximate these PDFs as Gaussians, or

  - approximate them using a finite number of random samples.

- The integral in the Bayes filter is extremely computationally expensive. To make this evaluation easier, we often

  - linearize the motion and observation models, or

  - employ Monte Carlo integration.
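The sample-based option can be sketched as a single step of a bootstrap particle filter: the belief is a finite set of samples, and pushing each sample through the motion model is a Monte Carlo approximation of the integral. The scalar `motion_model` and `measurement_likelihood` below are hypothetical, chosen only so the example runs.

```python
import numpy as np

rng = np.random.default_rng(0)

def motion_model(x_prev, v, rng):
    """Hypothetical nonlinear motion model with additive Gaussian process noise."""
    return x_prev + v * np.cos(x_prev) + rng.normal(0.0, 0.1, size=x_prev.shape)

def measurement_likelihood(y, x, sigma=0.2):
    """Hypothetical observation model y = x**2 + noise, evaluated as p(y | x)
    up to a constant factor (the constant cancels when weights are normalized)."""
    return np.exp(-0.5 * ((y - x**2) / sigma) ** 2)

def particle_filter_step(particles, v, y, rng):
    # Prediction: sampling the motion model for every particle approximates
    # the integral over the previous state by Monte Carlo.
    predicted = motion_model(particles, v, rng)
    # Correction: weight each sample by the measurement likelihood and normalize.
    weights = measurement_likelihood(y, predicted)
    weights /= weights.sum()
    # Resample so the posterior is again a set of equally weighted samples.
    idx = rng.choice(len(predicted), size=len(predicted), p=weights)
    return predicted[idx]

particles = rng.normal(1.0, 0.5, size=2000)  # samples drawn from the prior belief
particles = particle_filter_step(particles, v=0.1, y=1.44, rng=rng)
print(particles.mean(), particles.std())
```

No linearization is needed here, at the cost of carrying many samples; the sample count of 2000 is arbitrary.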

It's important to keep in mind that all of these recursive algorithms, which strive to better approximate the Bayes filter, rest on one major assumption: that the state estimation problem is fundamentally Markovian.

One method that tries to handle these problems is the Extended Kalman Filter.
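As a rough sketch of how this combines the Gaussian approximation with linearization, one predict/correct cycle in its common textbook form might look like the following. The models `f` and `h`, their Jacobians, and all numeric values are hypothetical and only serve to make the example runnable.

```python
import numpy as np

def ekf_step(x, P, v, y, f, F_jac, h, H_jac, Q, R):
    """One EKF cycle: the belief is a Gaussian with mean x and covariance P."""
    # Prediction: the mean goes through the nonlinear motion model, the
    # covariance through its Jacobian (the linearization).
    F = F_jac(x, v)
    x_pred = f(x, v)
    P_pred = F @ P @ F.T + Q
    # Correction: linearize the observation model about the predicted mean.
    H = H_jac(x_pred)
    S = H @ P_pred @ H.T + R
    K = P_pred @ H.T @ np.linalg.inv(S)  # Kalman gain
    x_new = x_pred + K @ (y - h(x_pred))
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new

# Hypothetical system: state = [position, speed], input = acceleration,
# quadratic drag on speed, range measurement to a beacon offset by d.
dt, d = 0.1, 2.0
f = lambda x, v: np.array([x[0] + dt * x[1], x[1] + dt * (v - 0.1 * x[1] ** 2)])
F_jac = lambda x, v: np.array([[1.0, dt], [0.0, 1.0 - 0.2 * dt * x[1]]])
h = lambda x: np.array([np.hypot(x[0], d)])
H_jac = lambda x: np.array([[x[0] / np.hypot(x[0], d), 0.0]])

x = np.array([1.0, 0.5])   # prior mean
P = 0.5 * np.eye(2)        # prior covariance
Q = 0.01 * np.eye(2)       # process noise covariance
R = np.array([[0.04]])     # measurement noise covariance

x_new, P_new = ekf_step(x, P, v=0.0, y=np.array([2.3]), f=f, F_jac=F_jac,
                        h=h, H_jac=H_jac, Q=Q, R=R)
print(x_new, P_new)
```

Only a mean and a covariance are stored, which is what makes the belief finite-dimensional.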

The Bayes filter provides us with a basis for deriving the recursive filters that stem from the Generalized Gaussian Filter, as well as the Particle Filter.